Feature Engineering

09/28/2022

Robert Utterback (based on slides by Andreas Muller)


Coming up with features is difficult, time-consuming, requires expert knowledge. "Applied machine learning" is basically feature engineering.

- Andrew Ng

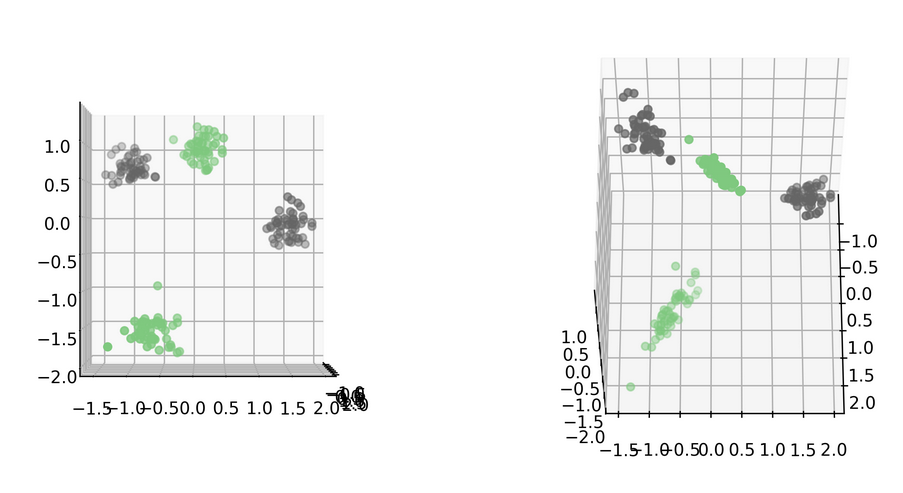

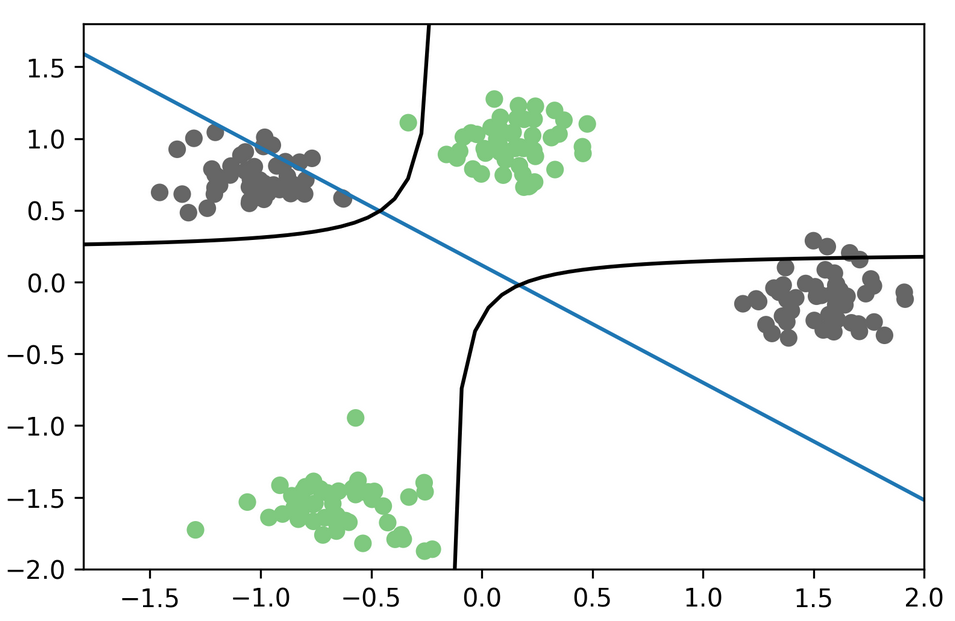

Interaction Features


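from sklearn.linear_model import LogisticRegressionCV

from sklearn.model_selection import train_test_split

X_i_train, X_i_test, y_train, y_test = train_test_split(
    X_interaction, y, random_state=0)  # X_interaction and y are assumed to be defined earlier

logreg3 = LogisticRegressionCV().fit(X_i_train, y_train)  # regularization strength chosen by internal CV

logreg3.score(X_i_test, y_test)  # mean accuracy on the test set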

0.960

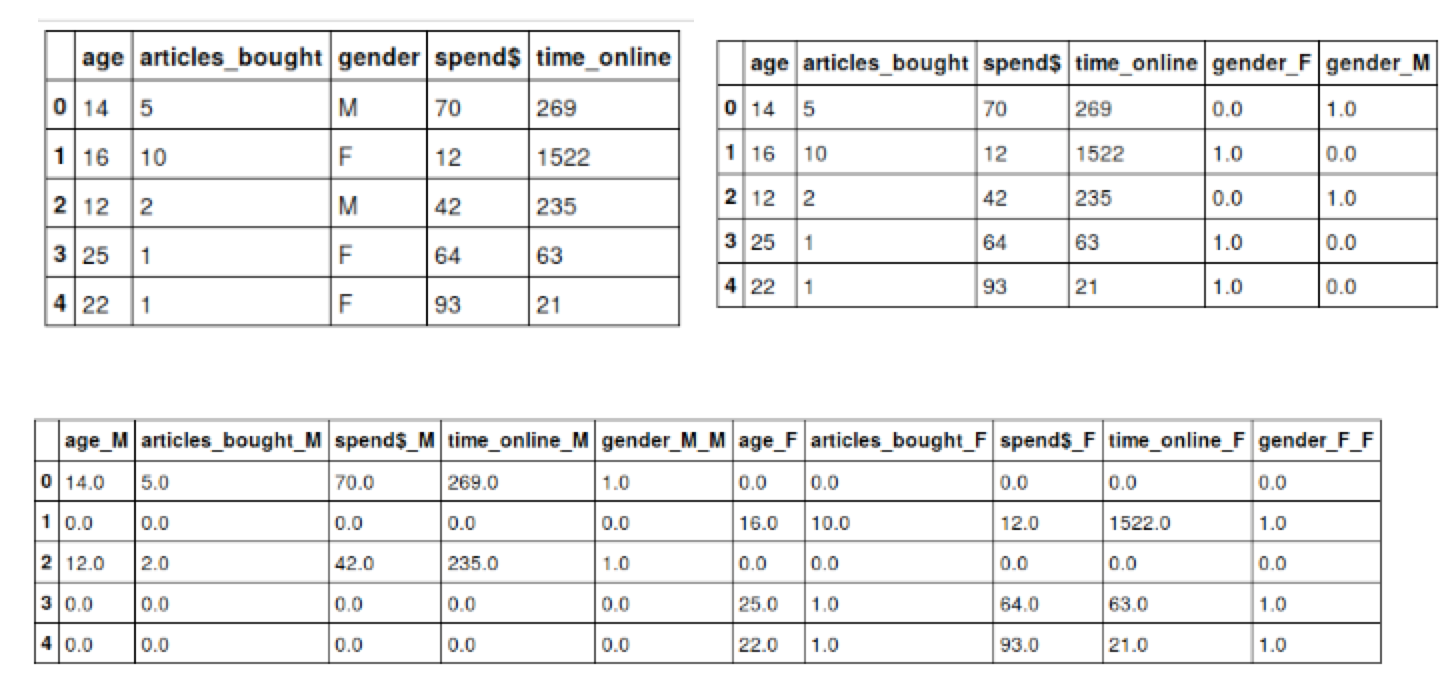

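- Interactions only (gender indicator * every feature): effectively one separate model per gender!

- Keep the original features as well: a common model plus a per-gender model that adjusts it.

- Products of multiple categorical variables: a common model plus several models that adjust for each combination of categories.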
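A minimal NumPy sketch of the gender interactions listed above, assuming a hypothetical toy dataset (the names `X_cont`, `gender`, `categories`, and `interactions` are illustrative, not from the slides). Multiplying each continuous feature by each one-hot gender column gives every gender its own block of columns, so a linear model learns separate weights per gender; stacking the original features next to those blocks gives the "common model + per-gender adjustment" variant.

```python
import numpy as np

# Hypothetical toy data: two continuous features (e.g. age, articles_bought).
X_cont = np.array([[25.0, 3.0],
                   [40.0, 1.0],
                   [19.0, 7.0]])
gender = np.array(["male", "female", "female"])

# Hand-rolled one-hot encoding of gender; columns are [female, male].
categories = np.array(["female", "male"])
onehot = (gender[:, None] == categories[None, :]).astype(float)

# Interaction block: every continuous feature times every gender indicator.
# For a given row, only the columns of that row's gender are non-zero.
interactions = (onehot[:, :, None] * X_cont[:, None, :]).reshape(len(X_cont), -1)

# Keep the originals too: shared weights plus per-gender adjustments.
X_interaction = np.hstack([X_cont, onehot, interactions])
print(X_interaction.shape)  # (3, 8)
```

In practice, scikit-learn's `OneHotEncoder` would usually replace the hand-rolled encoding step.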

More Interactions

age, articles_bought, gender, spend$, time_online
+ Male * (age, articles_bought, spend$, time_online)
+ Female * (age, articles_bought, spend$, time_online)
+ (age > 20) * (age, articles_bought, gender, spend$, time_online)
+ (age <= 20) * (age, articles_bought, gender, spend$, time_online)
+ (age <= 20) * Male * (age, articles_bought, gender, spend$, time_online)
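Part of the expansion above can be sketched in NumPy under simplifying assumptions: a hypothetical three-feature toy matrix, gender reduced to a single 0/1 `is_male` column, and only the common, age > 20, age <= 20, and (age <= 20) * Male terms. Each indicator, or product of indicators, gates a full copy of the features, so the model gets an extra set of weights that only applies to that subgroup.

```python
import numpy as np

# Hypothetical toy rows; columns are age, articles_bought, spend.
X = np.array([[18.0, 2.0, 30.0],
              [35.0, 5.0, 120.0],
              [19.0, 1.0, 15.0]])
is_male = np.array([1.0, 0.0, 0.0])  # assumption: gender as a single 0/1 column

young = (X[:, 0] <= 20).astype(float)  # indicator for age <= 20
old = 1.0 - young                      # indicator for age > 20

# Each indicator (or product of indicators) multiplies a full copy of the features.
X_more = np.hstack([
    X,                               # common model
    old[:, None] * X,                # adjustment for age > 20
    young[:, None] * X,              # adjustment for age <= 20
    (young * is_male)[:, None] * X,  # adjustment for young males only
])
print(X_more.shape)  # (3, 12)
```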

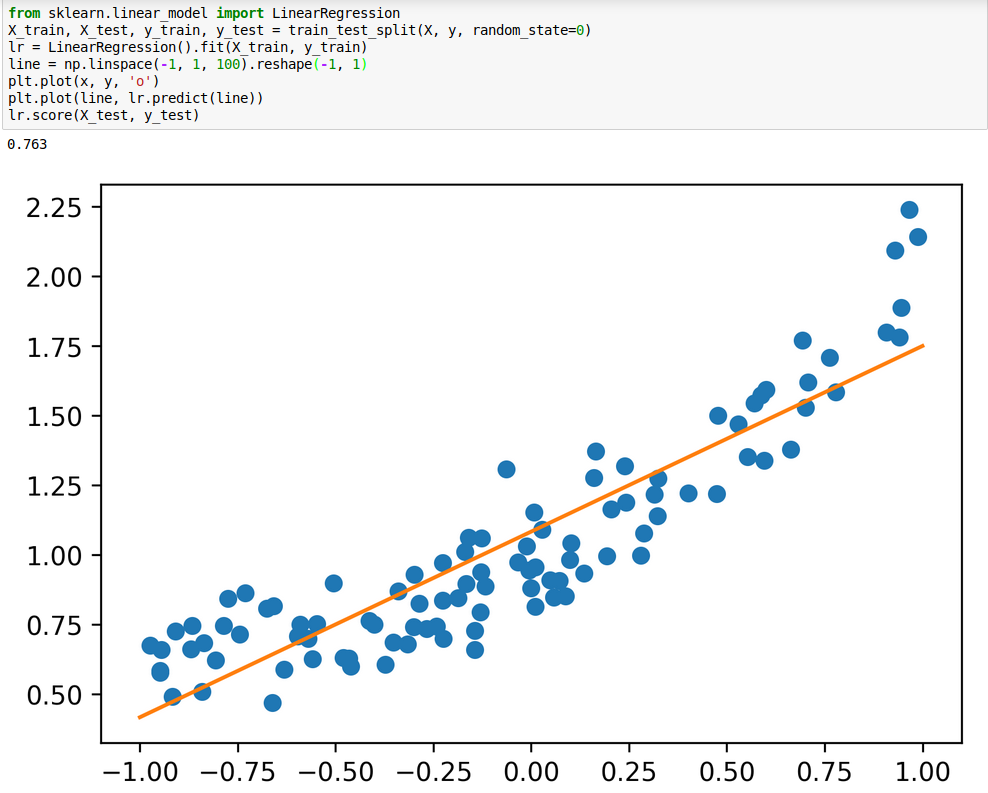

Polynomial Features


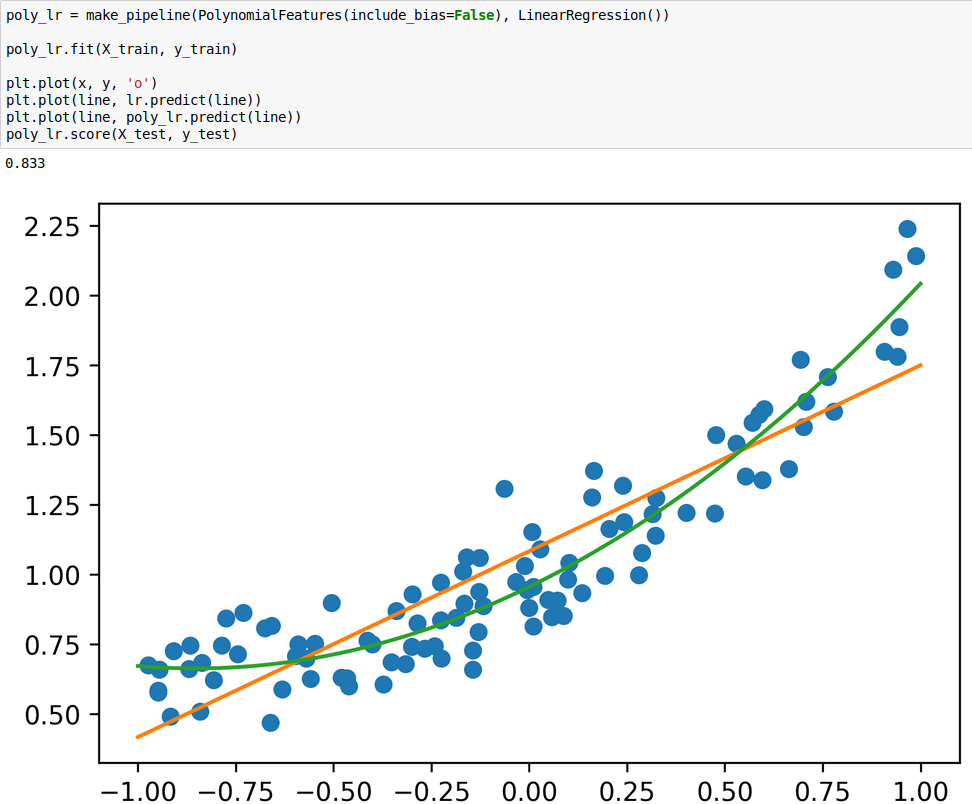

- PolynomialFeatures() adds polynomial terms and interactions between features.

- Same transformer interface (fit / transform) as the scalers.

- Create polynomial models by chaining it with a linear model using make_pipeline!
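A minimal sketch of the make_pipeline idea, assuming synthetic 1-D data with a quadratic trend (the data and the choice of `Ridge` are illustrative assumptions, not from the slides). Because `StandardScaler` and `PolynomialFeatures` sit inside the pipeline, both are refit on each training fold, so cross-validation never uses statistics from the held-out fold.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

# Synthetic data with a quadratic trend (illustrative assumption).
rng = np.random.RandomState(0)
X = rng.uniform(-3, 3, size=(100, 1))
y = 0.5 * X[:, 0] ** 2 - X[:, 0] + rng.normal(scale=0.3, size=100)

# Plain linear model vs. the same model on degree-2 polynomial features.
linear = make_pipeline(StandardScaler(), Ridge())
quadratic = make_pipeline(StandardScaler(), PolynomialFeatures(degree=2), Ridge())

# R^2 on each of 5 folds for both pipelines.
linear_scores = cross_val_score(linear, X, y, cv=5)
quadratic_scores = cross_val_score(quadratic, X, y, cv=5)
print(np.mean(linear_scores), np.mean(quadratic_scores))
```

Scaling before expanding follows the same order as the slide's code, where the already scaled X_bc_scaled is passed to PolynomialFeatures.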


from sklearn.preprocessing import PolynomialFeatures

poly = PolynomialFeatures()  # defaults: degree=2, include_bias=True

X_bc_poly = poly.fit_transform(X_bc_scaled)  # X_bc_scaled: scaled training features defined earlier

print(X_bc_scaled.shape)

print(X_bc_poly.shape)

(379, 13)

(379, 105)

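import numpy as np

from sklearn.linear_model import RidgeCV

from sklearn.model_selection import cross_val_score

scores = cross_val_score(RidgeCV(), X_bc_scaled, y_train, cv=10)  # R^2 on each of 10 folds

print(f"({np.mean(scores):.3f}, {np.std(scores):.3f})")  # mean and std of the fold scores

(0.693, 0.111)

scores = cross_val_score(RidgeCV(), X_bc_poly, y_train, cv=10)

print(f"({np.mean(scores):.3f}, {np.std(scores):.3f})")

(0.829, 0.071)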
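The jump from 13 to 105 columns follows from counting monomials. With the PolynomialFeatures defaults (degree=2, include_bias=True), the output holds a bias column, the 13 original features, their 13 squares, and C(13, 2) = 78 pairwise products; equivalently, C(13 + 2, 2) = 105. A quick check on random data with the same number of columns (the random matrix is only a stand-in):

```python
from math import comb

import numpy as np
from sklearn.preprocessing import PolynomialFeatures

n_features, degree = 13, 2

# Count by hand: bias + linear terms + squares + pairwise products.
by_hand = 1 + n_features + n_features + comb(n_features, 2)

# Closed form: number of monomials of degree <= d in n variables is C(n + d, d).
closed_form = comb(n_features + degree, degree)

# Compare with what PolynomialFeatures actually produces.
X = np.random.RandomState(0).normal(size=(5, n_features))
n_out = PolynomialFeatures(degree=degree).fit_transform(X).shape[1]
print(by_hand, closed_form, n_out)  # 105 105 105
```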

Discretization and Binning

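- Loses information: all values that fall into the same bin are treated as identical.

- Target-independent binning (e.g. equal-width or quantile bins) might place bin edges where they do not help predict the target.

- Powerful when combined with interactions: multiplying bin indicators by the original feature gives each bin its own slope, creating new features!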
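A sketch of binning plus interactions, assuming synthetic 1-D data (a noisy sine) and scikit-learn's `KBinsDiscretizer` with equal-width bins, which is one target-independent binning choice among several. One-hot bins alone let a linear model fit one constant per bin; multiplying the bin indicators by the raw feature adds one slope per bin, so the fit becomes piecewise linear.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.preprocessing import KBinsDiscretizer

# Synthetic 1-D data: a noisy sine (illustrative assumption).
rng = np.random.RandomState(0)
X = rng.uniform(-3, 3, size=(200, 1))
y = np.sin(X[:, 0]) + rng.normal(scale=0.1, size=200)

# Target-independent binning: 10 equal-width bins, one-hot encoded as a dense array.
binner = KBinsDiscretizer(n_bins=10, encode="onehot-dense", strategy="uniform")
X_binned = binner.fit_transform(X)

# Interaction of bin indicators with the raw feature: one slope per bin.
X_binned_slopes = np.hstack([X_binned, X_binned * X])

# Training R^2 only, to compare how flexible each representation is.
scores = {}
for name, feats in [("raw", X), ("binned", X_binned), ("binned + slopes", X_binned_slopes)]:
    scores[name] = LinearRegression().fit(feats, y).score(feats, y)
    print(name, round(scores[name], 3))
```

These are training scores, so they only show that each representation can express more; held-out evaluation (e.g. cross_val_score) is still needed to judge generalization.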